How does the world reach limits? This is a question that few dare to examine. My analysis suggests that these limits will come in a very different way than most have expected. They will come through financial stress that ultimately relates to rising unit energy costs, plus the need to use increasing amounts of energy for additional purposes:

- To extract oil and other minerals from locations where extraction is very difficult, such as in shale formations, or very deep under the sea;

- To mitigate water shortages and pollution issues, using processes such as desalination and long-distance transport of food; and

- To attempt to reduce future fossil fuel use, by building devices such as solar panels and electric cars that increase fossil fuel energy use now in the hope of reducing energy use later.

We have long known that the world is likely to eventually reach limits. In 1972, the book The Limits to Growth by Donella Meadows and others modeled the likely impact of growing population, limited resources, and rising pollution in a finite world. They considered a number of scenarios under a range of different assumptions. These models strongly suggested the world economy would begin to hit limits in the first half of the 21st century and would eventually collapse.

The indications of the 1972 analysis were considered nonsense by most. Clearly, the world would work its way around limits of the type suggested. The world would find additional supplies of the resources that were in short supply. It would become more efficient at using resources and would tackle the problem of rising pollution. The free market would handle any problems that might arise.

The Limits to Growth analysis modeled the world economy in terms of flows; it did not try to model the financial system. In recent years, I have been looking at the situation and have discovered that as we hit limits in a finite world, the financial system is the most vulnerable part of the system because it ties everything else together. Debt in particular is vulnerable because the time-shifting aspect of debt “works” much better in a rapidly growing economy than in an economy that is barely growing or shrinking.

The problem that now looks like it has the potential to push the world into financial collapse is something no one would have thought of: high oil prices that take a slice out of the economy, without anything to show in return. Consumers find that their own salaries do not rise as oil prices rise. They find that they need to cut back on discretionary spending if they are to have adequate funds to pay for necessities produced using oil. Food is one such necessity; oil is used to run farm equipment, make herbicides and pesticides, and transport finished food products. The result of a cutback in discretionary spending is recession or near recession, and less job availability. Governments find themselves in financial distress from trying to mitigate the recession-like impacts without adequate tax revenue.

One of our big problems now is a lack of cheap substitutes for oil. Highly touted renewable energy sources such as wind and solar PV are not cheap. They also do not substitute directly for oil, and they increase near-term fossil fuel consumption. Ethanol can act as an “oil extender,” but it is not cheap. Battery-powered cars are also not cheap.

The issue of rising oil prices is really a two-sided issue. The least expensive sources of oil tend to be extracted first. Thus, the cost of producing oil tends to rise over time. As a result, oil producers tend to require ever-rising oil prices to cover their costs. It is the interaction of these two forces that leads to the likelihood of financial collapse in the near term:

- Need for ever-rising oil prices by oil producers.

- The adverse impact of high energy prices on consumers.

If a cheap substitute for oil had already come along in adequate quantity, there would be no problem. The issue is that no suitable substitute has been found, and financial problems are here already. In fact, collapse may very well come from oil prices not rising high enough to satisfy the needs of those extracting the oil, because of worldwide recession.

The Role of Inexpensive Energy

A fact that few stop to realize is that energy of the right type is absolutely essential for making goods and services of all kinds. Even if the services are simply typing numbers into a computer, we need energy of precisely the right kind for several different purposes:

- To make the computer and transport it to the current location.

- To build the building where the worker works.

- To light the building where the worker works.

- To heat or cool the building where the worker works.

- To transport the worker to the location where he works.

- To produce the foods that the worker eats.

- To produce the clothing that the worker wears.

Furthermore, the energy used needs to be inexpensive, for many reasons: so that the worker’s salary goes farther; so that the goods or services created are competitive in a world market; and so that governments can gain adequate tax revenue from taxing energy products. We don’t think of fossil fuel energy products as being a significant source of tax revenue, but they very often are, especially for exporters (Rodgers map of oil “government take” percentages).

Some of the energy listed above is paid for by the employer; some is paid for by the employee. This difference is irrelevant, since all are equally essential. Some energy is omitted from the above list, but is still very important. Energy to build roads, electric transmission lines, schools, and health care centers is essential if the current system is to be maintained. If energy prices rise, taxes and fees to pay for basic services such as these will likely need to rise.

How “Growth” Began

For most primates, such as chimpanzees and gorillas, the number of the species fluctuates up and down within a range. Total population isn’t very high. If human population followed that of other large primates, there wouldn’t be more than a few million humans worldwide. They would likely live in one geographical area.

How did humans venture out of this mold? In my view, a likely way that humans were able to improve their dominance over other animals and plants was through the controlled use of fire, a skill they learned over one million years ago (Luke 2012). Controlled use of fire could be used for many purposes, including cooking food, providing heat in cool weather, and scaring away wild animals.

The earliest use of fire was in some sense very inexpensive. Dry sticks and leaves were close at hand. If humans twirled one stick against another, using the right technique and the right kind of wood, a fire could be made in less than a minute (Hough 1890). Once humans had discovered how to make fire, they could use it to leverage their meager muscular strength.

The benefits of the controlled use of fire are perhaps not as obvious to us as they would have been to the early users. When it became possible to cook food, a much wider variety of potential foodstuffs could be eaten. The nutrition from food was also better. There is even some evidence that cooking food allowed the human body to evolve in the direction of smaller chewing and digestive apparatus and a bigger brain (Wrangham 2009). A bigger brain would allow humans to outsmart their prey (Dilworth 2010).

Cooking food allowed humans to spend much less time chewing food than previously, only one-tenth as much time according to one study (4.7% of daily activity vs. 48% of daily activity) (Organ et al. 2011). The reduction in chewing time left more time for other activities, such as making tools and clothing.

Humans gradually increased their control over many additional energy sources. Training dogs to help in hunting came very early. Humans learned to make sailboats using wind energy. They learned to domesticate plants and animals, so that they could provide more food energy in the location where it was needed. Domesticated animals could also be used to pull loads.

Humans learned to use windmills and water mills made from wood, and eventually learned to use coal, petroleum (also called oil), natural gas, and uranium. The availability of fossil fuels vastly increased our ability to make substances that require heating, including metals, glass, and concrete. Prior to this time, wood had been used as an energy source, leading to widespread deforestation.

With the availability of metals, glass, and concrete in quantity, it became possible to develop modern hydroelectric power plants and transmission lines to transmit this electricity. It also became possible to build railroads, steam-powered ships, better plows, and many other useful devices.

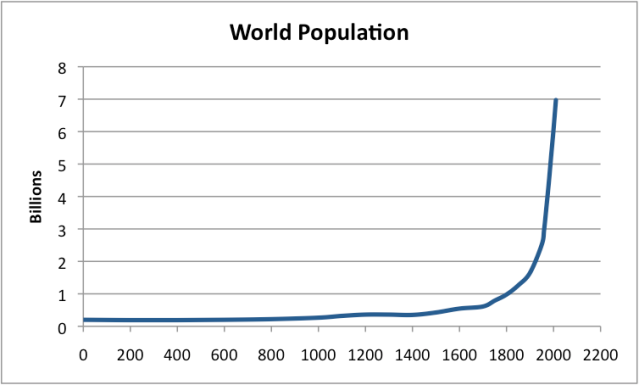

Population rose dramatically after fossil fuels were added, enabling better food production and transportation. This started about 1800.

Figure 1. World population based on data from “Atlas of World History,” McEvedy and Jones, Penguin Reference Books, 1978 and UN Population Estimates.

All of these activities led to a very long history of what we today might call economic growth. Prior to the availability of fossil fuels, the majority of this growth was in population, rather than a major change in living standards. (The population was still very low compared to today.) In later years, increased energy use was still associated with increased population, but it was also associated with an increase in creature comforts: bigger homes, better transportation, heating and cooling of homes, and greater availability of services like education, medicine, and financial services.

How Cheap Energy and Technology Combine to Lead to Economic Growth

Without external energy, all we have is the energy from our own bodies. We can perhaps leverage this energy a bit by picking up a stick and using it to hit something, or by picking up a rock and throwing it. In total, this leveraging of our own energy doesn’t get us very far; many animals do the same thing. Such tools provide some leverage, but they are not quite enough.

The next step up in leverage comes if we can find some sort of external energy to use to supplement our own energy when making goods and services. One example might be heat from a fire built with sticks used for baking bread; another example might be energy from an animal pulling a cart. This additional energy can’t take too much of (1) our human energy, (2) resources from the ground, or (3) financial capital, or we will have little left to invest in what we really want: technology that gives us the many goods we use, and services such as education, health care, and recreation.

The use of inexpensive energy led to a positive feedback loop: the value of the goods and services produced was sufficient to produce a profit when all costs were considered, thanks to the low cost of the energy used. This profit allowed additional investment, and contributed to further energy development and further growth. This profit also often led to rising salaries. The additional cheap energy use combined with greater technology produced the impression that humans were becoming more “productive.”
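The feedback loop described here can be illustrated with a toy calculation. This is only a sketch under invented assumptions, not a model from the analysis itself: the function `simulate_output`, the cost shares, and the reinvestment rate are all hypothetical numbers chosen to show the direction of the effect. Profit is whatever is left after energy and other costs, and a fixed fraction of it is reinvested to add to the next year's output.

```python
from typing import List


def simulate_output(energy_cost_share: float,
                    years: int = 10,
                    start_output: float = 100.0,
                    other_cost_share: float = 0.90,
                    reinvest_rate: float = 0.5) -> List[float]:
    """Toy feedback loop: each year's profit is partly reinvested.

    All parameters are hypothetical illustration values.
    A negative profit (a loss) shrinks next year's output.
    """
    output = start_output
    path = [output]
    for _ in range(years):
        # Profit is what remains after non-energy costs and energy costs.
        profit = output * (1.0 - other_cost_share - energy_cost_share)
        # Reinvested profit (or an absorbed loss) changes next year's output.
        output = output + reinvest_rate * profit
        path.append(output)
    return path


# Assumed energy cost shares: 4% of output (cheap) vs. 12% (expensive).
cheap = simulate_output(energy_cost_share=0.04)
expensive = simulate_output(energy_cost_share=0.12)

print(f"Cheap energy, output after 10 years:     {cheap[-1]:.1f}")
print(f"Expensive energy, output after 10 years: {expensive[-1]:.1f}")
```

With the assumed numbers, cheap energy leaves a positive margin and output compounds upward, while expensive energy turns the margin negative and output shrinks. The real economy is far more complicated; the sketch only shows why the loop is sensitive to the cost of energy.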

For a very long time, we were able to ramp up the amount of energy we used, worldwide. There were many civilizations that collapsed along the way, but in total, for all civilizations in the world combined, energy consumption, population, and goods and services produced tended to rise over time.

In the 1970s, we had our first experience with oil limits. US oil production started dropping in 1971. The drop in oil production set us up as easy prey for an oil embargo in 1973-1974, and oil prices spiked. We got around this problem, and further high-price problems in the late 1970s, by:

- Starting work on new inexpensive oil production in the North Sea, Alaska, and Mexico.

- Adopting more fuel-efficient cars, already available in Japan.

- Switching from oil to nuclear or coal for electricity production.

- Cutting back on oil-intensive activities, such as building new roads and doing heavy manufacturing in the United States.

The economy eventually more or less recovered, but men’s wages stagnated, and women found a need to join the workforce to maintain the standards of living of their families. Oil prices dropped back, but not quite as far as the prior level. The lack of energy-intensive industries (powered by cheap oil) likely contributed to the stagnation of wages for men.

Recently, since about 2004, we have again been encountering high oil prices. Unfortunately, the easy options to fix them are mostly gone. We have run out of cheap energy options; tight oil from shale formations isn’t cheap. Wages again are stagnating, even worse than before. The positive feedback loop based on low energy prices that we had been experiencing when oil prices were low isn’t working nearly as well, and economic growth rates are falling.

The technical name for the problem we are running into with oil is diminishing marginal returns. This represents a situation where more and more inputs are used in extraction, but these additional inputs add very little more in the way of the desired output, which is oil. Oil companies find that an investment of a given amount, say $1,000, yields a much smaller amount of oil than it used to in the past, often less than a fourth as much. There are often more up-front expenses in drilling the wells, and less certainty about the length of time that oil can be extracted from a new well.
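A small worked calculation shows what this means for the cost of each barrel. The $1,000 investment and the "a fourth as much" yield come from the paragraph above; the earlier yield of 100 barrels is a made-up figure used only for illustration, and `cost_per_barrel` is a hypothetical helper name.

```python
def cost_per_barrel(investment_usd: float, barrels_yielded: float) -> float:
    """Unit cost of oil: dollars invested per barrel produced."""
    if barrels_yielded <= 0:
        raise ValueError("barrels_yielded must be positive")
    return investment_usd / barrels_yielded


INVESTMENT_USD = 1_000.0        # investment amount used in the text
EARLIER_YIELD_BARRELS = 100.0   # hypothetical earlier yield, illustration only
YIELD_FRACTION_NOW = 0.25       # "a fourth as much" (the text says often less)

earlier_cost = cost_per_barrel(INVESTMENT_USD, EARLIER_YIELD_BARRELS)
current_cost = cost_per_barrel(
    INVESTMENT_USD, EARLIER_YIELD_BARRELS * YIELD_FRACTION_NOW
)
cost_multiple = current_cost / earlier_cost

print(f"Earlier cost per barrel: ${earlier_cost:.2f}")   # $10.00
print(f"Current cost per barrel: ${current_cost:.2f}")   # $40.00
print(f"Cost multiple: {cost_multiple:.1f}x")            # 4.0x
```

Whatever the earlier yield actually was, the ratio is the point: if the same investment produces a fourth as much oil, the cost per barrel is four times as high, and it is higher still if the yield is less than a fourth.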

Oil that requires high up-front investment needs a high price to justify its extraction. When consumers pay the high oil price, the amount they have for discretionary goods drops. The feedback loop starts working in the wrong direction, toward more layoffs and lower wages for those working. Companies, including oil companies, have a harder time making a profit. They find outsourcing labor costs to lower-cost parts of the world more attractive.

Can this Growth Continue Indefinitely?

Even apart from the oil price problem, there are other reasons to think that growth cannot continue indefinitely in a finite world. For one thing, we are already running short of fresh water in many parts of the world, including China, India and the Middle East. In addition, if population continues to rise, we will need a way to feed all of these people: either more arable land, or a way of getting more food per acre.

Pollution is another issue. One type is acidification of oceans; another leads to dead zones in oceans. Mercury pollution is a widespread problem. Fresh water that is available is often very polluted. Excess carbon dioxide in the atmosphere leads to concerns about climate change.

There is also an issue with humans crowding out other species. In the past, there have been five widespread die-offs of species, called “Mass Extinctions.” Humans seem now to be causing a Sixth Mass Extinction. Paleontologist Niles Eldredge describes the Sixth Mass Extinction as follows:

- Phase One began when humans first began to disperse to different parts of the world about 100,000 years ago. [We were still hunter-gatherers at that point, but we killed off large species for food as we went.]

- Phase Two began about 10,000 years ago, when humans turned to agriculture.

According to Eldredge, once we turned to agriculture, we stopped living within local ecosystems. We converted land to produce only one or two crops, and classified all unwanted species as “weeds.” Now with fossil fuels, we are bringing our attack on other species to a new higher level. For example, there is greater clearing of land for agriculture, overfishing, and too much forest use by humans (Eldredge 2005).

In many ways, the pattern of human population growth and growth in the use of resources by humans is like a cancer. Growth has to stop for one reason or another: smothering other species, depletion of resources, or pollution.

Many Competing Wrong Diagnoses of our Current Problem

The problem we are running into now is not an easy one to figure out because the problem crosses many disciplines. Is it a financial problem? Or a climate change problem? Or an oil depletion problem? It is hard to find individuals with knowledge across a range of fields.

There is also a strong bias against really understanding the problem, if the answer appears to be in the “very bad to truly awful” range. Politicians want a problem that is easily solvable. So do sustainability folks, and peak oil folks, and people writing academic papers. Those selling newspapers want answers that will please their advertisers. Academic book publishers want books that won’t scare potential buyers.

Another issue is that nature works on a flow basis. All we have in a given year in terms of resources is what we pull out in that year. If we use more resources for one thing, such as extracting oil or making solar panels, it leaves less for other purposes. Consumers also work mostly from the income from their current paychecks. Even if we come up with what look like wonderful solutions, in terms of an investment now for payback later, nature and consumers aren’t very co-operative in producing them. Consumers need ever-more debt to make the solutions sort of work. If one necessary resource, cheap oil, is in short supply, nature dictates that other resource uses shrink, to work within available balances. So there is more pressure toward collapse.

Virtually no one understands our complex problem. As a result, we end up with all kinds of stories about how we can fix our problem, none of which make sense:

“Humans don’t need fossil fuels; we can just walk away.” But how do we feed 7 billion people? How long would our forests last before they are used for fuel?

“More wind and solar PV.” But these use fossil fuels now, and don’t fix oil prices.

“Climate change is our only problem.” Climate change needs to be considered in conjunction with other limits, many of which are hitting very soon. Maybe there is good news about climate, but it likely will be more than offset by bad news from limits not considered in the model.